We try to understand the computational principles underlying natural language understanding by humans and machines. We investigate the neural implementation of these principles in the human brain, their evolutionary origins and their usefulness in language technology.

Our research

How are the languages of the world related? This is the central question in the discipline of historical linguistics. In his MSc thesis, Peter Dekker studied how neural networks can help to reconstruct the ancestry of languages.

Read on...

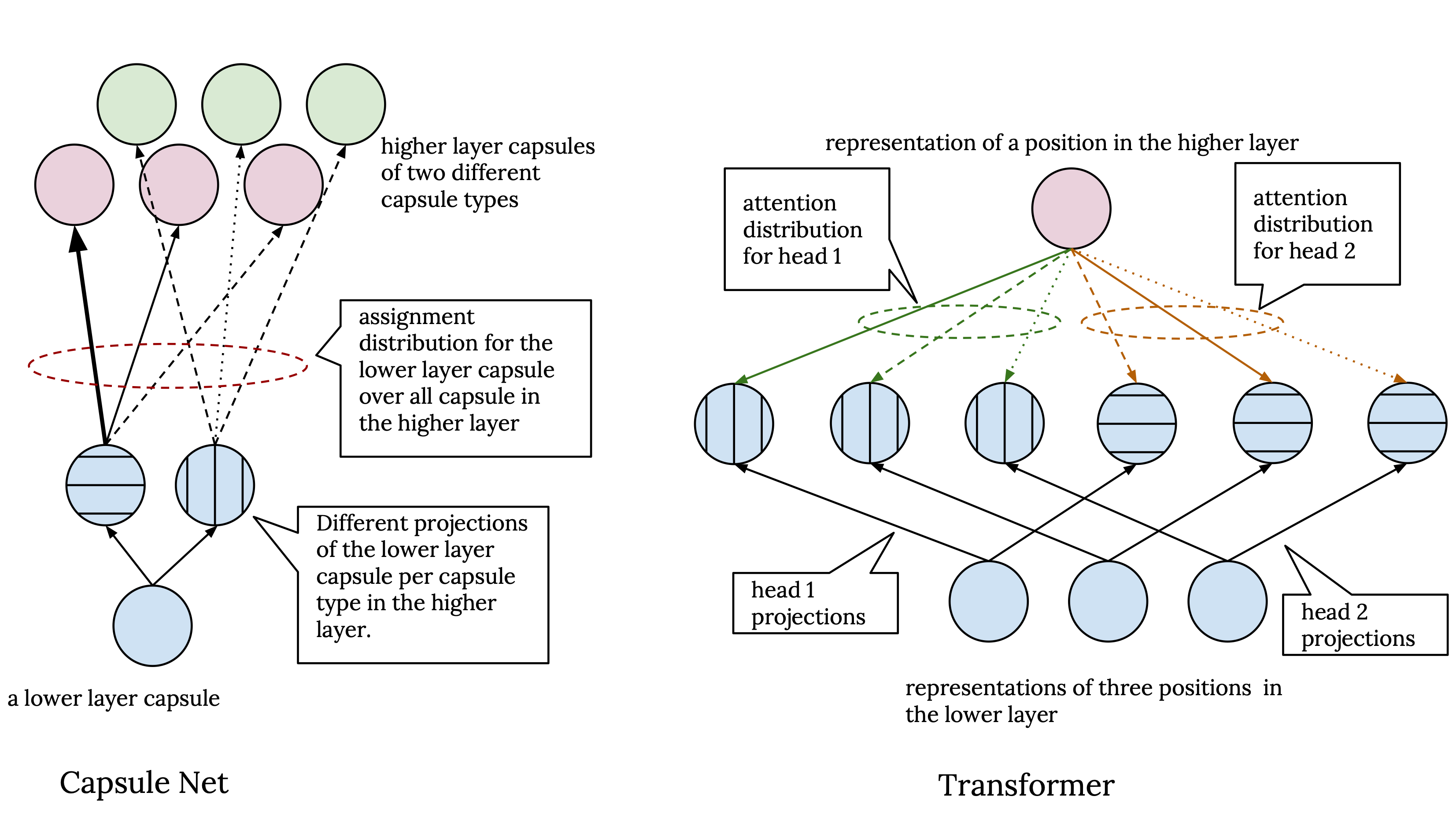

From Attention in Transformers to Dynamic Routing in Capsule Nets

In this post, we go through the main building blocks of transformers and capsule networks and try to draw connections between corresponding components of the two models. Our main goal is to understand whether these models are inherently different and, if not, how they relate.
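As a rough illustration, here is a minimal pure-Python sketch. It is not code from the post. It places the two mechanisms side by side as they are usually written: scaled dot-product attention (Vaswani et al., 2017) and routing-by-agreement (Sabour et al., 2017). The helper names, the toy inputs and the three routing iterations are our own assumptions. The sketch highlights one commonly noted difference, which is the axis the softmax normalizes over. In attention, the lower-layer units (keys) compete for each upper-layer unit (query). In dynamic routing, the upper-layer capsules compete for each lower-layer capsule's output.

```python
# Illustrative sketch with hypothetical helper names; not the post's code.
import math
from typing import List

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def softmax(xs: List[float]) -> List[float]:
    # Subtract the max for numerical stability.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def weighted_sum(weights: List[float], vectors: List[Vector]) -> Vector:
    dim = len(vectors[0])
    return [sum(w * v[k] for w, v in zip(weights, vectors)) for k in range(dim)]


def attention(queries: List[Vector], keys: List[Vector], values: List[Vector]):
    """Scaled dot-product attention.

    For each query (upper-layer unit), the softmax runs over the keys
    (lower-layer units), so lower units compete for each upper unit.
    """
    scale = math.sqrt(len(keys[0]))
    outputs, weights = [], []
    for q in queries:
        w = softmax([dot(q, k) / scale for k in keys])
        weights.append(w)
        outputs.append(weighted_sum(w, values))
    return outputs, weights


def squash(s: Vector) -> Vector:
    # Capsule non-linearity: keeps the direction and maps the norm into [0, 1).
    sq_norm = dot(s, s)
    if sq_norm == 0.0:
        return [0.0] * len(s)
    factor = sq_norm / (1.0 + sq_norm) / math.sqrt(sq_norm)
    return [factor * x for x in s]


def dynamic_routing(u_hat: List[List[Vector]], num_iters: int = 3):
    """Routing-by-agreement.

    u_hat[i][j] is lower capsule i's prediction vector for upper capsule j.
    The softmax runs over the upper capsules j for each lower capsule i,
    so upper capsules compete for each lower capsule's output.
    """
    n_lower, n_upper = len(u_hat), len(u_hat[0])
    b = [[0.0] * n_upper for _ in range(n_lower)]  # routing logits start at zero
    for _ in range(num_iters):
        c = [softmax(row) for row in b]  # coupling coefficients, one row per lower capsule
        v = []
        for j in range(n_upper):
            s_j = weighted_sum([c[i][j] for i in range(n_lower)],
                               [u_hat[i][j] for i in range(n_lower)])
            v.append(squash(s_j))
        # Raise a logit when a prediction agrees with the upper capsule's output.
        for i in range(n_lower):
            for j in range(n_upper):
                b[i][j] += dot(u_hat[i][j], v[j])
    return v, c
```

For example, if three lower capsules all predict roughly `[1, 0]` for upper capsule 0 but disagree about upper capsule 1, `dynamic_routing` shifts their coupling coefficients toward capsule 0. Each row of `c` still sums to one over the upper capsules. By contrast, each row of the attention weights sums to one over the keys.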

Read on...